Founded in July 2023 in Hangzhou, China, DeepSeek AI is an artificial intelligence company that aims to make Artificial General Intelligence (AGI) a reality. Led by Liang Wenfeng, co-founder of the hedge fund High-Flyer, which backs the company, DeepSeek is rapidly becoming a strong force in the global AI landscape. With an emphasis on open source, cost-effectiveness, and multilingual support (particularly English and Chinese), the company directly challenges Western AI companies such as OpenAI, Anthropic, and Meta AI.

In January 2025, the DeepSeek app’s rapid success spread to the United States. The app made headlines when it overtook ChatGPT on the App Store, which helped trigger a drop of roughly 17% in Nvidia’s stock price and a loss of nearly $600 billion in market value. It was clear that DeepSeek was not merely catching up with its Western rivals; it was changing the market.

In this article, you will learn about:

- DeepSeek AI

- DeepSeek AI’s Model Lineup

- DeepSeek AI Tutorials and User Guides

- How to Use the DeepSeek AI API

- DeepSeek AI Real-World Applications

- Alternatives to DeepSeek AI (Comparison Snapshot)

DeepSeek AI

The success of DeepSeek AI is already being felt in the global market. In January 2025, the tech industry was rocked when the DeepSeek app overtook ChatGPT as the #1 free app on the US Apple App Store. NVIDIA’s market value fell by nearly $600 billion in a single day, and the share prices of other AI-related companies fluctuated, highlighting the disruptive potential of cost-effective models going mainstream.

More than a breakthrough story, DeepSeek is changing the broader economics of AI, forcing companies to rethink value, access, and openness. Some governments and organizations in Asia, Africa, and Latin America are looking beyond established Western AI providers and seeking partnerships with DeepSeek or similar providers.

DeepSeek AI’s Model Lineup: Innovation on Steroids

DeepSeek’s rapid-fire releases of Large Language Models (LLMs) offer a glimpse into its innovation-first philosophy. Here’s a breakdown:

✅ 1. DeepSeek Coder (Nov 2023)

- Purpose: Code generation & understanding

- Data Mix: 87% code, 13% natural language

- Languages: English & Chinese

- Parameters: 1.3B to 33B

- Highlights:

  - 16K context window

  - The 33B base model beats CodeLlama-34B on HumanEval

  - Well suited to project-level code completion and infilling

✅ 2. DeepSeek-LLM (Nov 2023)

- Purpose: General-purpose chatbot

- Parameters: 7B and 67B

- Training Tokens: 2 trillion

- Performance: The 67B model outperforms LLaMA 2 70B on a range of benchmarks, especially code, math, and reasoning

- License: DeepSeek License (source-available)

✅ 3. DeepSeek-MoE (Jan 2024)

- Type: Mixture-of-Experts (MoE)

- Size: 16B parameters, with 2.7B active per token

- Context Window: 4K

- Efficiency: Performance comparable to dense 7B models such as LLaMA 2 7B, using only about 40% of their computation
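The "2.7B active per token" figure comes from routing: for each token, a small router picks a few experts, and only their weights are used. The toy sketch below shows generic top-k gating in plain Python. It is not DeepSeekMoE’s actual router, which adds refinements such as fine-grained and shared experts, and the router scores are made-up values.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of router scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(scores: list[float], k: int) -> dict[int, float]:
    """Keep the k highest-probability experts and renormalize their weights.

    Only the chosen experts run for this token; the rest stay idle, which is
    why an MoE model's active parameter count is far below its total.
    """
    probs = softmax(scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in chosen)
    return {i: probs[i] / kept for i in chosen}


# Made-up router scores for one token over 8 experts, routed to the top 2
gates = top_k_route([0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2], k=2)
print(gates)  # experts 1 and 4, with weights that sum to 1

# Share of parameters active per token, using the figures in this lineup
print(f"DeepSeek-MoE: {2.7 / 16:.1%} active")   # 16.9% active
print(f"DeepSeek-V3:  {37 / 671:.1%} active")   # 5.5% active
```

Renormalizing over the kept experts keeps each token’s output a weighted average, even though most experts are skipped.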

✅ 4. DeepSeek-V3 (Dec 2024, Updated Mar 2025)

- Type: MoE with Multi-head Latent Attention (MLA)

- Parameters: 671B total, 37B active

- Training Tokens: 14.8 trillion

- Compute: Only 2.78M H800 GPU hours

- Benchmark: On par with GPT-4o and Claude 3.5 Sonnet

- Update (V3-0324, March 2025): Better reasoning and code understanding

- Availability: Hugging Face, GitHub

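💰 Training Cost: About $6 million ($5.576M by DeepSeek’s own estimate for the final training run, excluding earlier research and ablation experiments), roughly 94% cheaper than the reported $100M for GPT-4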
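The headline figure is straightforward arithmetic. DeepSeek’s V3 technical report multiplies its 2.788M H800 GPU hours by an assumed rental price of $2 per GPU hour. The GPT-4 figure is an outside estimate, not an official cost. A quick check of both numbers:

```python
# GPU hours reported for DeepSeek-V3's full training run
H800_GPU_HOURS = 2_788_000
# Rental price per GPU hour assumed in the V3 technical report
USD_PER_GPU_HOUR = 2.0
# Widely reported (unofficial) GPT-4 training cost used for comparison
GPT4_COST_USD = 100_000_000

v3_cost = H800_GPU_HOURS * USD_PER_GPU_HOUR
savings = 1 - v3_cost / GPT4_COST_USD

print(f"DeepSeek-V3 estimate: ${v3_cost / 1e6:.3f}M")  # $5.576M
print(f"Relative to GPT-4:    {savings:.1%} cheaper")  # 94.4% cheaper
```

The estimate covers compute rental only. It excludes hardware purchases, staff, and failed experiments, so it should not be read as the total cost of building the model.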

✅ 5. DeepSeek R1 (Jan 2025)

- Specialty: Reasoning tasks

- Matches or slightly beats OpenAI o1 on the AIME 2024 and MATH-500 benchmarks

- Training Method: Reinforcement Learning (GRPO)

- License: MIT (fully open-source)

- API Access: deepseek-reasoner

- R1-Lite-Preview: An earlier preview of the reasoning model, released in November 2024

Tutorials and User Guides – Getting Started with DeepSeek AI

Whether you’re a developer, researcher, or enthusiast, DeepSeek AI makes it easy to integrate powerful LLMs into your apps and workflows. Here’s a step-by-step guide to help you get started:

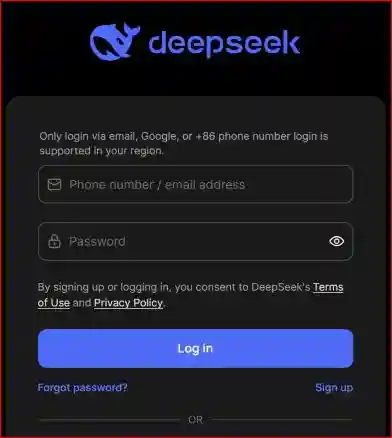

Step 1: Get Your API Key

To begin using DeepSeek’s cloud models:

- Visit the DeepSeek Platform (platform.deepseek.com)

- Sign up or log in with your credentials

- Create an API key in the platform’s API keys section

This key authenticates your requests to DeepSeek’s hosted models via RESTful endpoints.
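It is good practice to keep the key out of source code. The sketch below reads it from an environment variable and builds the request headers. The variable name DEEPSEEK_API_KEY and both helper names are our own choices, not something the platform requires.

```python
import os


def load_api_key(var_name: str = "DEEPSEEK_API_KEY") -> str:
    """Read the API key from an environment variable (the name is our choice)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your API key")
    return key


def auth_headers(api_key: str) -> dict[str, str]:
    """Headers for authenticated JSON requests to the chat completions API."""
    return {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
```

Export the variable in your shell before running your script. You can then pass `auth_headers(load_api_key())` wherever the examples below hard-code `YOUR_API_KEY`.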

Step 2: Choose the Right API Endpoint

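Use the base URL:

https://api.deepseek.com

OpenAI-style SDKs and libraries can use this URL directly. For clients that expect a /v1 path, the following is also accepted:

https://api.deepseek.com/v1

The v1 in this URL exists only for OpenAI compatibility and has nothing to do with the model version.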
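Clients build the full endpoint by appending a path such as /chat/completions to whichever base URL you choose. This is why both Python examples later in this guide work, one with /v1 and one without. A tiny illustrative helper (our own, not part of any SDK):

```python
def chat_completions_url(base_url: str) -> str:
    """Join an OpenAI-style base URL with the chat completions path."""
    return base_url.rstrip("/") + "/chat/completions"


for base in ("https://api.deepseek.com", "https://api.deepseek.com/v1"):
    print(chat_completions_url(base))
# https://api.deepseek.com/chat/completions
# https://api.deepseek.com/v1/chat/completions
```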

Step 3: Select a Model

You can choose between several models depending on your use case:

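| Model Name | Description |
|---|---|
| deepseek-chat | General-purpose model (DeepSeek-V3) |
| deepseek-coder | Code generation model (legacy name; DeepSeek merged its coder model into deepseek-chat in 2024, so check the current model list) |
| deepseek-reasoner | Reasoning model with step-by-step logic (DeepSeek-R1) |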
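When you call deepseek-reasoner, the chain of thought comes back separately from the final answer. Per DeepSeek’s API documentation, the assistant message carries a reasoning_content field alongside the usual content. The sketch below builds a request body and splits a parsed response into those two parts. The helper names are our own, and the sample response is made up and trimmed to the fields the code reads.

```python
def reasoner_payload(question: str) -> dict:
    """Request body for the R1-based reasoning model."""
    return {
        "model": "deepseek-reasoner",
        "messages": [{"role": "user", "content": question}],
    }


def split_reasoner_reply(response_json: dict) -> tuple[str, str]:
    """Return (reasoning, answer) from a parsed chat completions response.

    reasoning_content holds the chain of thought; content holds the answer.
    Missing fields come back as empty strings.
    """
    message = response_json["choices"][0]["message"]
    return message.get("reasoning_content") or "", message.get("content") or ""


# Made-up response, trimmed to the fields read above
sample = {
    "choices": [{
        "message": {
            "role": "assistant",
            "reasoning_content": "9.11 vs 9.8: compare tenths, 1 < 8.",
            "content": "9.8 is larger.",
        }
    }]
}
reasoning, answer = split_reasoner_reply(sample)
print(answer)  # 9.8 is larger.
```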

Step 4: Example – Chat Completion with Python

Here’s how to make a basic chat request using Python’s requests library:

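```python
import requests

# OpenAI-compatible chat completions endpoint
url = "https://api.deepseek.com/v1/chat/completions"

headers = {
    "Authorization": "Bearer YOUR_API_KEY",  # replace with your key from Step 1
    "Content-Type": "application/json",
}

payload = {
    "model": "deepseek-chat",
    "messages": [
        {"role": "user", "content": "What is the future of AGI?"}
    ],
    "temperature": 0.7,
}

response = requests.post(url, headers=headers, json=payload, timeout=60)
response.raise_for_status()  # fail loudly on HTTP errors (e.g., 401 for a bad key)

# The reply text is in the first choice's message
print(response.json()["choices"][0]["message"]["content"])
```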
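For long answers you may prefer streaming. Because the API follows the OpenAI format, adding "stream": True to the payload should return server-sent events. These are lines of the form `data: {json chunk}`, ending with `data: [DONE]`, where each chunk carries a partial delta.content, and the stream may include comment lines such as `: keep-alive`. The parser below is a minimal sketch under those assumptions. It accepts any iterable of lines and is exercised here on made-up lines.

```python
import json
from typing import Iterable, Iterator, Union


def iter_stream_text(lines: Iterable[Union[str, bytes]]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event (SSE) lines."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        # Skip blank separators and SSE comment lines such as ": keep-alive"
        if not line.startswith("data:"):
            continue
        data = line[len("data:"):].strip()
        if data == "[DONE]":  # end-of-stream sentinel
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            piece = (choice.get("delta") or {}).get("content")
            if piece:
                yield piece


# Made-up stream lines, shaped like an OpenAI-compatible SSE response
fake_lines = [
    'data: {"choices": [{"delta": {"role": "assistant", "content": ""}}]}',
    "",
    ": keep-alive",
    'data: {"choices": [{"delta": {"content": "Hello, "}}]}',
    'data: {"choices": [{"delta": {"content": "world!"}}]}',
    "data: [DONE]",
]
print("".join(iter_stream_text(fake_lines)))  # Hello, world!
```

With requests, you would call `requests.post(url, headers=headers, json=payload, stream=True)` and feed `response.iter_lines()` into `iter_stream_text`. That method yields bytes, which the parser decodes as UTF-8.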

Local Deployment (Advanced)

Prefer local inference? You can deploy DeepSeek-V3 using various engines:

| Inference Engine | Hardware Supported |
|---|---|
| vLLM | NVIDIA GPUs |
| LMDeploy | AMD & NVIDIA GPUs |
| SGLang | NVIDIA & AMD GPUs |
| Huawei MindIE | Huawei Ascend NPUs |

DeepSeek’s API is OpenAI-compatible, so tools like LangChain, LlamaIndex, and Open Interpreter can work with it after minimal modification: just change the base_url and api_key.
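Because the request format is shared, you can switch between DeepSeek’s cloud API and a self-hosted, OpenAI-compatible server by changing only the base URL, key, and model name. The sketch below builds, but does not send, the same request for both targets using only the standard library. The local target assumes a vLLM server exposing its OpenAI-compatible API on the default port 8000 under /v1. The model name and the placeholder key "EMPTY" are also assumptions, so match them to how you launched your server.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for any OpenAI-compatible chat completions endpoint."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# Same code path, two targets: only base URL, key, and model change
cloud = build_chat_request("https://api.deepseek.com", "YOUR_API_KEY", "deepseek-chat", "Hi")
local = build_chat_request("http://localhost:8000/v1", "EMPTY", "deepseek-ai/DeepSeek-V3", "Hi")

for req in (cloud, local):
    print(req.get_method(), req.full_url)

# To actually send one: urllib.request.urlopen(cloud, timeout=60)
```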

How to Use DeepSeek AI: Getting Started with the API

Developers can access DeepSeek AI through an API compatible with OpenAI’s SDK. Here’s a basic Python example using the requests library:

```python
import requests

api_key = "YOUR_API_KEY"
# The base URL without /v1 also works
url = "https://api.deepseek.com/chat/completions"

headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {api_key}",
}

data = {
    "model": "deepseek-chat",
    "messages": [{"role": "user", "content": "Hello, how are you?"}],
}

response = requests.post(url, headers=headers, json=data, timeout=60)
response.raise_for_status()  # surface HTTP errors instead of printing an error body
print(response.json()["choices"][0]["message"]["content"])
```

For full tutorials and local deployment guides (via SGLang, LMDeploy, or vLLM), see DeepSeek’s GitHub repositories or the DeepSeek API docs (api-docs.deepseek.com).
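The chat completions API is stateless: the server does not remember earlier turns, so a multi-turn conversation means resending the whole history each time. Below is a minimal sketch of that bookkeeping. The Conversation class is our own illustration. Its send argument is any function that posts a payload and returns the parsed JSON, such as a small wrapper around the requests.post call above. Here a hypothetical fake_send stands in for the network.

```python
from typing import Callable, Optional


class Conversation:
    """Client-side chat history for a stateless chat completions API."""

    def __init__(self, model: str = "deepseek-chat", system: Optional[str] = None):
        self.model = model
        self.messages: list[dict] = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def ask(self, text: str, send: Callable[[dict], dict]) -> str:
        """Add a user turn, send the full history, and record the reply."""
        self.messages.append({"role": "user", "content": text})
        # Send a copy so later turns don't mutate a payload the caller kept
        reply = send({"model": self.model, "messages": list(self.messages)})
        content = reply["choices"][0]["message"]["content"]
        # Store only role and content: per DeepSeek's docs, deepseek-reasoner's
        # reasoning_content should not be sent back in later requests
        self.messages.append({"role": "assistant", "content": content})
        return content


def fake_send(payload: dict) -> dict:
    """Hypothetical stand-in for an HTTP call; reports the history length."""
    n = len(payload["messages"])
    return {"choices": [{"message": {"role": "assistant", "content": f"saw {n} message(s)"}}]}


chat = Conversation(system="You are a helpful assistant.")
print(chat.ask("Hello!", fake_send))            # saw 2 message(s)
print(chat.ask("And a follow-up?", fake_send))  # saw 4 message(s)
```

Because the full history is resent each turn, long conversations cost more tokens per request, so trimming old turns is a common next step.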

Real-World Applications: Where DeepSeek AI Is Making Waves

DeepSeek’s models are being deployed across automobiles, smartphones, appliances, healthcare, and government in China. Here’s how:

| Sector | Use Cases | Examples and Notes |
|---|---|---|
| Automobiles | Voice assistants, AI navigation, and natural language controls | – |
| Smartphones | AI-enhanced assistants, content generation | Huawei’s Xiaoyi uses DeepSeek-R1; also adopted by Oppo, Vivo, Xiaomi, and Honor |
| Home Appliances | Smart controls in ACs, fridges, and vacuums | Midea’s ACs adjust based on “I feel cold”; integrated in smart appliances |
| Healthcare | Diagnostics, imaging, prescriptions (controversial) | Used by 100+ hospitals; some regions raised warnings (e.g., Hunan province banned AI-generated prescriptions) |
| Government | Document generation, admin work, smart policing | – |

Alternatives to DeepSeek AI

While DeepSeek AI offers powerful open-source and cloud-based Large Language Models (LLMs), there are other competitive, cost-effective, and accessible options in this ecosystem. Here are some of the best options to consider:

1. LLaMA 3.3 by Meta AI

- Parameters: 70 Billion

- Multilingual: Supports 8 languages, including English and Hindi

- Use Cases: Ideal for general-purpose LLM applications like chatbots, summarization, and translation

- License: Llama 3.3 Community License (open weights)

- Highlight: Backed by Meta’s research, it ranks among the top open models on community benchmarks

2. Qwen2.5-Max by Alibaba Cloud

- Training: Pretrained on 20+ trillion tokens

- Architecture: Mixture of Experts (MoE)

- Context Window: Up to 32K tokens

- License: Proprietary; unlike many smaller Qwen2.5 models, its weights have not been openly released

- Deployment: Available via the Alibaba Cloud API and Qwen Chat, with a demo on Hugging Face

- Use Case: Enterprise-ready, scalable for document processing and reasoning

3. Mistral AI’s Open Models

- Reputation: Known for fast, efficient models with competitive performance

- Release Style: Lightweight, high-quality open-source checkpoints

- Model Examples: Mistral-7B, Mixtral, and others

- Platform: Available via Hugging Face, GitHub, and Ollama

4. Hugging Face Community Models

- Platform: Central hub for exploring thousands of open-source LLMs

- Features: Includes hosted inference endpoints, compatibility with the transformers library, and model cards

- Notable Models: BLOOM, Falcon, Phi-2, LLaVA, and more

- Ease of Use: One-click deployment, Colab-ready, and free-tier options

DeepSeek AI Comparison Snapshot

| Feature | DeepSeek AI | LLaMA 3.3 | Qwen2.5-Max | Mistral AI | Hugging Face |
|---|---|---|---|---|---|
| Open Source | ✅ | ✅ | ❌ | ✅ | ✅ |
| API Access | ✅ | ❌ | ✅ | ✅ | ✅ |
| Token Context Window | 32K+ | 128K | 32K | 8K–32K (varies) | Varies |
| Multilingual Support | Moderate | High | High | Medium | Varies |
| Best Use Case | Reasoning, Coding | Multilingual NLP | Enterprise NLP | Lightweight Apps | Model Exploration |

These options are not only complements to DeepSeek but also potential upgrades depending on your project’s needs – whether it’s speed, multilingual support, licensing flexibility, or open infrastructure.

Conclusion

Built on an open-source approach, DeepSeek AI has developed rapidly, and its models have been widely adopted across industries, especially in China. Models such as DeepSeek-V3 and R1 compete with global leaders and drive innovation with strong reasoning and coding capabilities. However, the picture is complicated by controversies such as a possible US government ban and concerns about AI use in healthcare. With faster releases such as R2 reportedly planned, and alternatives such as LLaMA and Qwen in the market, DeepSeek’s role in democratizing AI is significant.
